Uncovering limitations of Gemini's OpenAI-compatible API

Recently, while developing my AI agent library, agentai, I introduced a new CI pipeline to run examples against various models for each pull request. This process ensures that all elements of the library work correctly with each new release. I started by running these tests using GitHub Models, primarily for convenience (as they are already integrated) and to enable external contributors to use the same test suite in the same environment.

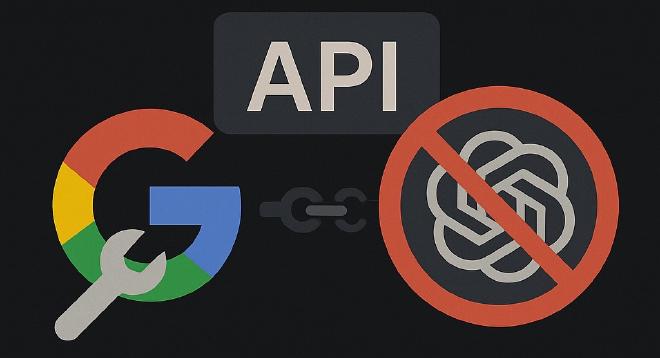

The next step was to add Gemini models to the testing matrix. I’ve learned from past experience that LLM providers impose different limitations in their APIs. In theory, every provider offers a consistent set of features, such as structured output and function calling. However, even when providers nominally adhere to the same standards, discrepancies arise.

For example, I previously found that the Ollama API didn’t follow the JSON Schema standard: it limited enum values to strings, while the specification allows other types and even mixed types within the same enum. This issue has since been fixed. I encountered a similar problem with Google AI Studio, which rejects schemas where "$schema" is explicitly declared, requiring me to make adjustments in agentai.
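To make these two schema quirks concrete, here is a minimal sketch in Python (chosen for brevity; agentai itself is a Rust library, and this is not its actual implementation). The helper `strip_schema_keyword` and the example `priority_schema` are hypothetical names invented for illustration. The schema uses a mixed-type enum, which JSON Schema permits, and the helper drops the top-level "$schema" key before the schema would be sent to a provider that rejects it.

```python
import copy
import json


def strip_schema_keyword(schema):
    """Return a deep copy of `schema` without its top-level "$schema" key.

    Only the top level is touched, so a property that happens to be
    named "$schema" deeper in the document is left alone.
    """
    cleaned = copy.deepcopy(schema)
    cleaned.pop("$schema", None)
    return cleaned


# Hypothetical schema for illustration. The enum mixes strings, integers
# and null, which JSON Schema allows.
priority_schema = {
    "$schema": "https://json-schema.org/draft/2020-12/schema",
    "type": "object",
    "properties": {
        "priority": {"enum": ["low", "high", 1, 2, None]},
    },
    "required": ["priority"],
}

cleaned = strip_schema_keyword(priority_schema)
print(json.dumps(cleaned, indent=2))
```

Working on a copy keeps the original schema intact, so the same definition can still be sent unchanged to providers that accept "$schema".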

Returning to the main topic, I attempted to run the examples using Gemini. To simplify the process, I reused the existing code designed for OpenAI API-compatible models. I added the new Gemini model, set the base_url to the official OpenAI-compatible API endpoint, and started the tests. Almost immediately, I encountered the following error:

    20:59:45 [DEBUG] (1) agentai::agent: Agent iteration: 0
    20:59:45 [DEBUG] (1) reqwest::connect: starting new connection: https://generativelanguage.googleapis.com/
    Error: Web call failed for model 'gemini-2.5-flash (adapter: OpenAI)'.
    Cause: Request failed with status code '400 Bad Request'. Response body:
    [{
      "error": {
        "code": 400,
        "message": "Function calling with a response mime type: 'application/json' is unsupported",
        "status": "INVALID_ARGUMENT"
      }
    }
    ]

The reason is straightforward: Google’s OpenAI compatibility layer does not support function calling and structured output (JSON mode) in the same API call. So, what does this mean for agentai? I will document this limitation, create tests to monitor its status, and recommend that users interact with the Gemini API directly for such use cases.
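A monitoring test for this limitation could look roughly like the sketch below. It is written in Python for brevity and is not agentai code: the function names and the example payload are hypothetical, and the payload fields (`tools`, `response_format`) assume the OpenAI chat-completions request shape. The first helper flags a request that combines function calling with JSON output before it is sent; the second recognises the error response quoted in the log above, so a CI job could report whether the limitation is still in place.

```python
import json


def combines_tools_and_json_mode(payload):
    """True when a chat-completions payload asks for both tools and JSON output."""
    has_tools = bool(payload.get("tools"))
    response_format = payload.get("response_format") or {}
    wants_json = response_format.get("type") in ("json_object", "json_schema")
    return has_tools and wants_json


def is_known_limitation(status_code, body_text):
    """True when an error response matches the one quoted in the log."""
    if status_code != 400:
        return False
    try:
        body = json.loads(body_text)
    except json.JSONDecodeError:
        return False
    # In the log, the body is a list wrapping a single error object.
    entries = body if isinstance(body, list) else [body]
    for entry in entries:
        error = entry.get("error") if isinstance(entry, dict) else None
        message = error.get("message", "") if isinstance(error, dict) else ""
        if (
            isinstance(message, str)
            and "Function calling" in message
            and "unsupported" in message
        ):
            return True
    return False


# Hypothetical request combining a tool definition with JSON mode.
payload = {
    "model": "gemini-2.5-flash",
    "messages": [{"role": "user", "content": "What is the weather in Oslo?"}],
    "tools": [
        {
            "type": "function",
            "function": {
                "name": "get_weather",  # illustrative tool name
                "parameters": {"type": "object", "properties": {}},
            },
        }
    ],
    "response_format": {"type": "json_object"},
}

# Response body rebuilt from the log above.
sample_body = json.dumps(
    [
        {
            "error": {
                "code": 400,
                "message": "Function calling with a response mime type: "
                "'application/json' is unsupported",
                "status": "INVALID_ARGUMENT",
            }
        }
    ]
)

print(combines_tools_and_json_mode(payload))
print(is_known_limitation(400, sample_body))
```

Matching on the message text is fragile by design: if Google changes the wording or lifts the restriction, the check stops matching, which is the signal that the documented limitation needs to be revisited.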

Why is this effort of testing and verifying different LLM providers so important? It’s crucial for bringing consistency to my library and ensuring a uniform experience for all users. The landscape of leading LLM providers is always evolving. Examples and documentation are vital parts of any library, as they demonstrate to new developers what can be achieved. This is why I invest effort in keeping them up-to-date. These aspects are often overlooked, but automating and enforcing these tests ensures they remain current and reliable.